In Microsoft’s Mobile-first, Cloud-first vision (part 1) I mentioned that I'm VERY excited about Microsoft’s Mobile-first, Cloud-first vision based on Microsoft Azure services. This time I cover new Intune and Windows 10 features, partly based on Experts Live sessions.

Windows 10 is created as One product family running on One platform using One app store. It's the platform for the new world, where the focus is on cloud-based devices and management. Using one Windows offers the same user experience on multiple devices, with universal Windows applications running on it. To make things easier, big changes are coming to the way the operating system is deployed and managed.

Some notes on Windows 10 for Enterprise:

-Windows 10 can be managed fully as a mobile OS in Microsoft Intune. No GPOs are needed for that.

-Every Microsoft-based device, system or phone should support Windows 10; that's the idea or message!

-Image management is no longer needed in Windows 10. Just manage it like a mobile OS from now on.

-Windows is called Windows 10 because it's really a new OS which everyone is familiar with! It's the platform for the new world.

-Join Windows 10 to Azure Active Directory instead of using on-premises Directory Services. A new way of thinking ;)

-The new infrastructure model will likely be Azure with Active Directory, Azure RemoteApp, Enterprise Mobility Suite and Windows 10.

-ConfigMgr can still be used, but is no longer needed. Hybrid with Intune is the best option, I guess!

To manage Windows 10 for Enterprise, Microsoft Intune can be used. Microsoft released a new Wave this week with new functionality:

New Microsoft Intune capabilities coming this week

-iOS & Android App Wrapper (company apps)

-Per-App VPN (can be used per single app)

-Conditional Access Policies (differs per OS)

-Managed Mobile Apps (Intune MAM)

-Protected Browser Management (URL filtering)

-Bulk Device Enrollment (single service account used)

-Device Lock Down (Kiosk Mode)

-Allow & Deny Applications (blacklist/whitelist)

Finally you can say that Microsoft Intune is enterprise ready now! Let's have a look at some notes from this week:

-New features announced at TEE14 are available now!

-A new ConfigMgr hotfix makes Intune policies apply much faster. It can be downloaded from Microsoft Support.

-Intune Q4 updates on data/application containerization and wrapping.

-Intune and ConfigMgr hybrid features are still not in sync. It would be great if this could be improved in the future. You have to wait multiple weeks or months to get the same functionality in ConfigMgr!

-Windows Phone Feature Pack for 8.x devices, which offers many management improvements.

-Bulk enrollment, enterprise lock and application wrapper are finally there! Many people were waiting for them.

With Windows Phone Feature Pack for 8.x devices (as mentioned earlier) there will be:

-Richer Policy set (more than other vendors)

-Wi-Fi, (trigger) VPN, Certificates push

-Encryption intelligence for SD cards

-Improved Application lifecycle management

-Improved Inventory

-Remote Lock, Password (PIN) reset

With these products coming and already there, the future looks bright for Microsoft. There is a new System Center (and ConfigMgr) coming, but there is nothing to hear about that yet. It's all about the Mobile-first, Cloud-first vision, which seems to be a new way of getting things done. Stay tuned for the next blogpost on my own experiences!

Thursday, November 27, 2014

Tuesday, November 25, 2014

Microsoft’s Mobile-first, Cloud-first vision (part 1)

On Tuesday November 18th I attended Experts Live in Ede (NL). This event on new Microsoft technology is held once a year. It was a full house, with almost 800 people listening to around 50 speakers spread over more than 40 sessions. Most of the sessions and the opening keynote were about Microsoft Azure technology. Let's have a look at the sessions I attended and the lessons learned!

I attended 2 sessions on Microsoft Azure, 2 on Microsoft Intune, 1 on Azure RemoteApp and 1 on Windows 10 for Enterprise. The message was Mobile-first, Cloud-first in the keynote and almost all sessions. It's about bringing all systems and applications to the cloud, and managing them from there! On-premises is definitely not hot anymore. Not for Directory Services, not for System Center, not for Exchange, not for SQL, not for management and monitoring. Time to start working from a new perspective, let go of what is and move to the cloud ;)

As seen on the Azure footprint, Microsoft Azure is growing rapidly. At the moment there are already 19 Azure datacenter regions open for business. Numbers are changing fast (weekly), but to give an overview I include some of them:

-New Azure customers a week: >10.000

-SQL databases in Azure: 1.200.000

-Storage objects: >30 trillion

-Active Directory (AD) users: 350 million

-AD authentications a week: >18 billion

Hyperscale: over 300 services spanning compute, storage and networking supporting a wide spectrum of workloads!

Microsoft Azure is booming business, changing fast with resources scaling up daily. You pay for the resources used, not for VMs that are turned off. Microsoft released a new Azure portal preview which looks awesome to me. There is Azure Resource Manager to create deployment templates (for example: deploy SQL 2014 Always-On servers with a single template) and Azure Operational Insights for online monitoring. Furthermore there is Windows Azure Pack for managing private clouds, Azure RemoteApp for global access to business applications, and Enterprise Mobility Suite for mobile management.

Last but not least there is Windows Phone Feature Pack for 8.x devices, which offers a richer policy set (and much more). Microsoft Identity Manager offers self-service identity management for users, and Azure AD Application Proxy offers access to on-premises applications from the cloud. Microsoft is doing a great job here! For both Microsoft Azure and Microsoft Intune you can start a 30-day trial to have a look at the functionality. Just start it today and decide what it can do for your business/organization.

Some notes on Azure and RemoteApp:

-At the moment there are 19 Azure datacenter regions open for business (growing rapidly).

-Over 300 services spanning Compute, Storage and Networking supporting a wide spectrum of workloads!

-The new Azure portal is ready for role-based access and can be used for admins and view-only access now.

-New Azure G-family (Godzilla) systems are coming, with 32 cores, 448 GB RAM and 6,5 TB local SSD maximum!

-Azure RemoteApp offers applications on Windows, Mac OS X, iOS and Android. Applications run on Windows Server in the Azure cloud, where they are easier to scale and update. Users can access the applications remotely from their Internet-connected device.

-With Windows 10 coming as One product family, One platform and One store, management can be done fully from the cloud.

I'm VERY excited about Microsoft’s Mobile-first, Cloud-first vision, and hope to be part of it very soon. Stay tuned for the next blogpost on new Microsoft Intune and Windows 10 features!

Friday, November 21, 2014

An error occurred while starting the task sequence (0x8007000E)

Just when you thought you had seen all error messages during ConfigMgr deployment, a new one pops up: "An error occurred while starting the task sequence (0x8007000E)". It showed up randomly on multiple systems, before the task sequence started. When starting the deployment again it may work, or it may show the error message again. That's definitely not what you want.

When looking on Microsoft TechNet I found the following post: An error occurred while starting the task sequence (0x8007000E). It mentions:

-Actually, 0x8007000e ="Not enough storage is available to complete this operation." This refers to memory (RAM), not disk storage.

-The task sequence could not save the policy to the TS Environment. The TS environment has run out of space. Your task sequence has too many apps, or too many updates, or too many steps, or too many referenced packages.

-The task sequence will try to download all policies that are targeted to the client machine. So, if you targeted updates to a collection and the client machine is a member of that collection, the task sequence will download the policy for those updates. The task sequence cannot distinguish what the policies mean unless it downloads them. All it knows is that they are meant for that client. So, if a lot of policies are targeted to the client, the task sequence will run out of environment space.

-This issue is not fixed in 2012 SP1 :-(

-One note: In SCCM 2012 R2, the XML max size goes up to 32MB. So, you can have over 3 times as many deployments in R2 without hitting that barrier. (That's the sales pitch for R2 today) I still recommend that you break your WSUS deployments out. I'm seeing much better SQL performance by doing this.

-Deleting the old software update groups, cleaning up WSUS and then restarting the server worked for me too.

Long story short, I found 66 Software Update Groups (SUGs) in ConfigMgr, for only 4 Automatic Deployment Rules. Most of them had lots of expired and superseded updates. I deleted most of them, and left only the SUGs needed. You can choose "Add to an existing SUG" instead of "Create a new SUG" in the rule, and remove all old SUGs that are left. After that, deployment was working again immediately!

Another reason for me to always put updates in an existing SUG! That's better for overview, for keeping ConfigMgr fast and clean, and it gives fewer deployment issues too. Problem solved ;)
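To see why 0x8007000E points to memory rather than disk, it helps to split the value into its parts. The following is a minimal sketch in Python (the function name decode_hresult is just an illustration, not part of any ConfigMgr tooling): the upper bits mark a failure from the Win32 facility (7), and the lower 16 bits hold Win32 error code 14, which is the "Not enough storage is available to complete this operation" message quoted above.

```python
def decode_hresult(hresult):
    """Split a 32-bit HRESULT value into its failure flag, facility and code."""
    return {
        "failure": bool((hresult >> 31) & 0x1),  # bit 31: set means failure
        "facility": (hresult >> 16) & 0x7FF,     # bits 16-26: 7 is the Win32 facility
        "code": hresult & 0xFFFF,                # bits 0-15: the Win32 error code
    }


result = decode_hresult(0x8007000E)
print(result)
```

The same split works for most 0x8007xxxx errors seen in smsts.log: the last four hex digits are a plain Win32 error code.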

Wednesday, November 19, 2014

Clearing Duplicate Firmware Objects in UEFI BIOS (resolved)

When deploying physical or virtual systems with a UEFI BIOS, a bootmgfw.efi file is created at every deployment. This is bad for two reasons: (1) there will be a lot of files in the bootstore after a few deployments, (2) on Hyper-V Generation 2 VMs this will be the top file in the boot order, so PXE boot isn't active at the next deployment. That means you need to "Move up" the Network adapter after each deployment. In this blogpost I describe how to edit the bootstore and remove the EFI files. Not that easy if you ask me. At the moment it's not clear to me whether this is a bug or by design.

Warning: Removing entries in the bootstore can disrupt your VM. Besides the Windows Boot Manager items (bootmgfw.efi), EFI SCSI and Network devices (disk, network) can be removed as well!

Let's have a look at the steps needed to free up the boot store. They come from http://jeff.squarecontrol.com/archives/184, but there's an error in them:

To view these duplicate entries, use the command: bcdedit /enum firmware

1. Save a copy of the current BCD system store by running the following command: bcdedit /export newbcd

2. Make another backup of the system store, just in case: copy newbcd bcdbackup

3. Enumerate the firmware namespace objects in the BCD system store, saving to a text file: bcdedit /enum firmware > enumfw.txt

4. Open the enumfw.txt file in Notepad, and delete all lines except those with firmware GUIDs. Delete the {fwbootmgr} and {bootmgr} lines as well – you only want the GUIDs.

5. Rename the edited enumfw.txt file to a command file called enumfw.cmd.

6. Insert the following BCDEDIT command in front of each identifier in the enumfw.cmd file: bcdedit /store newbcd /delete

Let's pause here, because the command as given is not right. It fails with the message: "The boot configuration data store could not be opened". There are two reasons for this: (1) the GUIDs must be enclosed in double quotes, (2) the /f qualifier is missing (optional).

When using both the double quotes and the /f qualifier at the end it works fine, for example: bcdedit /store newbcd /delete "{GUID}" /f

No error message this time: "The operation completed successfully"! Let's continue editing the bootstore and removing the EFI files.

7. Add the following command to the end of the enumfw.cmd file, then save it: bcdedit /import newbcd /clean

Note: The /import /clean option deletes all NVRAM entries and then re-initializes NVRAM based on the firmware namespace objects in the newbcd BCD store.

8. Run the enumfw.cmd file and reboot the system afterwards (optional). This time it will work fine.

Use bcdedit /enum firmware to verify that the extra entries are gone. Just great that all bootmgfw.efi files are removed now!
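Steps 4 to 7 are manual editing work, so they could also be scripted. Below is a minimal sketch in Python, assuming the text saved in enumfw.txt has "identifier" lines like the sample shown; the sample text, the GUID in it and the function name build_cleanup_commands are made up for illustration. It keeps only real GUIDs (so {fwbootmgr} and {bootmgr} are skipped), wraps each one in double quotes, adds /f, and ends with the /import /clean line. Keep the warning above in mind: this selects every firmware GUID, including EFI SCSI and Network entries, so review the generated lines before running them.

```python
import re
from typing import List

# Matches only "identifier" lines that hold a real GUID; well-known names
# such as {bootmgr} and {fwbootmgr} do not match and are skipped.
GUID_LINE = re.compile(
    r"^identifier\s+(\{[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\})$"
)


def build_cleanup_commands(enum_output: str, store: str = "newbcd") -> List[str]:
    """Turn 'bcdedit /enum firmware' text into the lines for enumfw.cmd."""
    guids = []
    for line in enum_output.splitlines():
        match = GUID_LINE.match(line.strip())
        if match and match.group(1) not in guids:
            guids.append(match.group(1))
    # Double quotes around the GUID and /f at the end, as described in step 6.
    commands = ['bcdedit /store {0} /delete "{1}" /f'.format(store, g) for g in guids]
    # Step 7: import the edited store and clean NVRAM.
    commands.append("bcdedit /import {0} /clean".format(store))
    return commands


# Hypothetical sample of enumfw.txt, shortened for illustration.
SAMPLE = """\
Firmware Boot Manager
---------------------
identifier              {fwbootmgr}
displayorder            {bootmgr}
                        {11111111-2222-3333-4444-555555555555}

Windows Boot Manager
--------------------
identifier              {bootmgr}
description             Windows Boot Manager

Windows Boot Manager
--------------------
identifier              {11111111-2222-3333-4444-555555555555}
description             Windows Boot Manager
"""

for command in build_cleanup_commands(SAMPLE):
    print(command)
```

The printed lines can be saved as enumfw.cmd and run as in step 8. With many VMs this at least removes the Notepad work from the procedure.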

I would still like to know whether this is a bug or by design. In this case I'm using around 10 Virtual Machines (used as Microsoft RDS hosts), but what to do when you have many more VMs? That seems like a lot of work to me. (to be continued)

Source:

Clearing Duplicate Firmware Objects in UEFI BIOS

bcdedit: The delete command specified is not valid

Monday, November 17, 2014

Deploy multiple applications using Dynamic Variables in a Task Sequence

When deploying applications within a task sequence you can add a maximum of 10 applications in a single step. To deploy more applications you can add another "Install application" step or choose "Install applications according to dynamic variable list". That way you can use a single step for as many applications as you want. Just configure the following steps:

-Create a collection and add Collection Variables to it. The names must be APP01, APP02, APP03 (for example) and so on. The value must be the name of the application. Add as many applications as needed.

-In the task sequence add an "Install application" step and choose "Install applications according to dynamic variable list": APP (for example). Mark "If an application installation fails, continue installing other applications in the list" when needed.

-Just make sure that on every application used, "Allow this application to be installed from the Install Application task sequence action without being deployed" is checked.

(Instead of APP you can use any name you want, as long as numbers are used. The name used in the task sequence must be the same.)

Deploy the task sequence to the created collection. All applications will be deployed in sequence, based on the numbering of the collection variables chosen. Just another way of installing applications ;)

In my case I'm installing around 30 applications in a single step. Not a problem at all, and very easy to configure.
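With around 30 applications, typing the variable names by hand is where mistakes creep in. As a small illustration, here is a sketch in Python that produces the numbered names for a list of applications; the function name build_app_variables and the application names are hypothetical, and the two-digit numbering simply follows the APP01, APP02, APP03 pattern above.

```python
def build_app_variables(app_names, prefix="APP"):
    """Map application names to numbered variable names (APP01, APP02, ...)."""
    return {
        "{0}{1:02d}".format(prefix, index): name
        for index, name in enumerate(app_names, start=1)
    }


# Hypothetical application names; use the exact names from the ConfigMgr console.
variables = build_app_variables(["7-Zip", "Adobe Reader", "Notepad++"])
for name, value in variables.items():
    print("{0} = {1}".format(name, value))
```

The output is only a checklist for filling in the Collection Variables; the prefix must match the base variable name entered in the task sequence step.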

More blogposts on this topic:

Deploy multiple packages using Dynamic Variables in a Task Sequence

Veeam Availability Suite v8 - Availability for the Modern Data Center

Sponsor post

Veeam Availability Suite v8 is NOW AVAILABLE for VMware vSphere and Microsoft Hyper-V!

Veeam Availability Suite v8 delivers Availability for the Modern Data Center to enable the Always-On Business. This new suite provides recovery time and point objectives (RTPO) of < 15 minutes for ALL applications and data through:

High-speed recovery: Rapid recovery of what you want, the way you want it

Data loss avoidance: Near-continuous data protection (near-CDP) and streamlined disaster recovery

Verified protection: Guaranteed recovery of every file, application or virtual server, every time

Leveraged data: Using backup data to create an exact copy of your production environment

Complete visibility: Proactive monitoring and alerting of issues before they result in operational impact

Thursday, November 13, 2014

Webinar: Maximize the ROI of your private cloud

Sponsor post

This upcoming week, you can join Savision’s complimentary webinars on the cloud. Find out how you can minimize unplanned downtime and know when cloud resources are going to be exhausted long before it happens. Get familiar with Savision’s Cloud Capacity Management solution- Cloud Reporter- and Savision’s free tuning and optimization solution- Cloud Advisor. They both featured a new release during TechEd EU. The newest release of both solutions adds VMware support. Register for the webinar now. The webinar will be hosted by Savision’s VP of R&D, Steven Dwyer.

Here are the links to use for each webinar:

Tuesday, Nov 18, 2014 11:00 am EST / 17:00 CET http://bit.ly/1pN01Yr

Thursday, Nov 20, 2014 9:30 am EST / 15:30 CET

http://bit.ly/1zhkkx5

The webinar is specially designed to highlight the new features and walk you through the many benefits of using Cloud Reporter & Cloud Advisor to monitor your cloud!

Labels:

Cloud Advisor,

Cloud Reporter,

Savision,

Sponsor post

MDT 2013 - Deploy Multiple Windows versions with a single Deploymentshare

When deploying a Windows image in MDT 2013 you can add rules in CustomSettings.ini to supply a product key during deployment (for example). Settings in this ini file are used to create the unattend file, which is needed during mini-setup. Just add the following rules for skipping the product key:

-SkipProductKey=YES

-ProductKey=AAAAA-BBBBB-CCCCC-DDDDD-EEEEE

When using multiple Windows versions, however, this will not do the job. In that case add the product key in the deployment task sequence(s). Add it at the start of the task sequence, beneath the Initialization phase: name the variable ProductKey and enter a KMS Client Setup Key (for example). It's just that simple ;)

When looking for KMS Client Setup Keys have a look here: http://technet.microsoft.com/en-us/library/jj612867.aspx

Just love MDT because of simplicity and functionality!
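For the single-version case, the two rules end up in CustomSettings.ini roughly as in the sketch below. It is written in Python only to show the resulting file; the function name build_custom_settings is made up, the [Settings]/[Default] layout with Priority=Default is an assumption about a typical deployment share, and the product key is the same placeholder as above.

```python
import configparser
import io


def build_custom_settings(product_key):
    """Return CustomSettings.ini text with the two product key rules."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep the original casing of the rule names
    # Assumed layout: one [Default] section referenced by Priority.
    config["Settings"] = {"Priority": "Default"}
    config["Default"] = {"SkipProductKey": "YES", "ProductKey": product_key}
    buffer = io.StringIO()
    config.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


ini_text = build_custom_settings("AAAAA-BBBBB-CCCCC-DDDDD-EEEEE")
print(ini_text)
```

With multiple Windows versions the ProductKey line would be left out here and set per task sequence instead, as described above.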

Wednesday, November 12, 2014

Xian Network Manager SP3 has been officially released now!

Sponsor post

Today Jalasoft has released the latest version of Xian Network Manager. This new version (SP3) has several internal improvements, and the most visible one is added support for SFlow. Let's have a look at the improvements:

SFlow monitoring:

SFlow support has been added so Xian NM can now properly receive and filter SFlow packets along with Netflow V5 and V9, so three of the most important and used flow technologies are supported and can be processed at the same time to generate alerts, performance graphs, and reports on OpsMgr related to the content of the network traffic.

New virtual center rules:

to monitor the data storages associated to ESX hosts and virtual machines.

Environmental sensor rules for Cisco routers and ASA devices:

to monitor the voltage, temperature, battery status, and more.

IP addresses resolving for Flow:

the engine can now properly resolve any public IP address into its domain name and improve the performance to make sure no delays are happening while translating these IP addresses into something users can understand when seeing the corresponding alerts or performance counters in the OpsMgr console.

Flow monitor service:

a new service has been included in Xian NM to explicitly monitor Flow data. This improves performance and functionality since it is now possible to have multiple Flow services installed and each of them can independently monitor Flow traffic from various sources and with independent databases, queues, and performance parameters.

SDK Loader:

a new connector module and improved OpsMgr SDK communication has been implemented exclusively for OpsMgr 2012 so performance and reliability when talking to OpsMgr has been greatly improved. For backward compatibility, if you install NM on OpsMgr 2007, the previous SDK communication is loaded but if it detects OpsMgr 2012, the new one is used, therefore the name.

For more information on the new release have a look at:

http://xiansuite.blogspot.com/2014/11/flow-feature-accurate-traffic-analysis.html

Labels: Jalasoft, Sponsor post, Xian, Xian Network Manager, Xian NM

Sysprep and Capture task sequence fails when capturing a Windows 8.x image

Last week I created a Windows 8.1 Update 1 image with MDT 2013. During the Sysprep and Capture process an error message came up. Time to take some action if you ask me! ;)

On Microsoft TechNet I found the following answer:

This problem occurs because the LTIApply.wsf script fails to check for the existence of the boot folder on the system partition before the script runs the takeown.exe command to change ownership on the folder. The takeown.exe command fails with a "Not Found" error if the boot folder doesn't exist. This causes the Sysprep and Capture task sequence to fail.

For the workaround have a look here: https://support.microsoft.com/kb/2797676?wa=wsignin1.0

Great, it works that way!

Monday, November 10, 2014

How to deploy a Windows Image on UEFI-based Computers

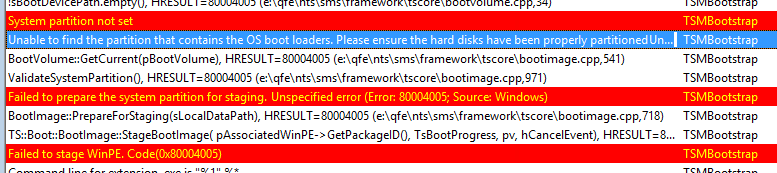

In an earlier post I described how to deploy a Windows image on UEFI-based computers using PXE boot. This post can be found here: Blogpost. This time I want to deploy a Windows image on a Hyper-V Generation 2 VM using ConfigMgr boot media. Because Generation 2 uses UEFI and Secure Boot, deployment does not work by default. Let's have a look at some errors I see when starting the deployment from a 64-bit boot image.

-Unable to find a raw disk that could be partitioned as the system disk.

-Failed to prepare the system partition for staging. The system cannot find the drive specified. (Error: 8007000F; Source: Windows)

-Failed to stage WinPE. Code(0x8007000F)

-System partition not set.

-Unable to find the partition that contains the OS boot loaders. Please ensure the hard disks have been properly partitioned.

-Failed to prepare the system partition for staging. Unspecified error (Error: 80004005; Source: Windows)

-Failed to stage WinPE. Code(0x80004005)

The trick is to disable Secure Boot and create the UEFI partitions yourself. Turn Secure Boot off in the VM's properties and, once the ConfigMgr boot media has started, press F8 and create the partitions manually:

- Diskpart

- Select disk 0 (0 being the disk to set up)

- Clean (wipe the disk)

- Convert gpt (convert disk to GPT)

- Create partition efi size=200 (EFI system partition)

- Assign letter=s (Any allowable letter)

- Format quick fs=FAT32 (Format the ESP)

- Create partition msr size=128 (Create the MSR partition)

- Create partition primary (Create Windows partition)

- Assign letter=c

- Format quick fs=NTFS (Format primary partition)

- Exit

Comment 8-4-2016: Create a diskpart script and add it into your USB/ISO (not PXE) media, if you don't want to type it manually. Press F8 and start your script with: diskpart /s filename.txt (Source)
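As a rough sketch of that idea, the manual steps above could be written to a script file with a few lines of Python. The function names and the default file name are only illustrative assumptions (they are not part of diskpart or ConfigMgr); the commands themselves are the ones listed above, in the same order.

```python
from pathlib import Path

# The diskpart commands from the manual steps above, in the same order.
# "{disk}" is filled in with the disk number (0 in the steps above).
DISKPART_COMMANDS = (
    "select disk {disk}",
    "clean",
    "convert gpt",
    "create partition efi size=200",
    "assign letter=s",
    "format quick fs=fat32",
    "create partition msr size=128",
    "create partition primary",
    "assign letter=c",
    "format quick fs=ntfs",
    "exit",
)


def build_diskpart_script(disk: int = 0) -> str:
    """Return the script text, one command per line (hypothetical helper)."""
    if disk < 0:
        raise ValueError("disk number must be zero or higher")
    lines = [command.format(disk=disk) for command in DISKPART_COMMANDS]
    # Windows-style line endings, since the file is read inside WinPE.
    return "\r\n".join(lines) + "\r\n"


def write_diskpart_script(path: str = "filename.txt", disk: int = 0) -> Path:
    """Write the script to a file that can be added to the USB/ISO media."""
    target = Path(path)
    # newline="" keeps the line endings exactly as they were built above.
    with target.open("w", encoding="ascii", newline="") as handle:
        handle.write(build_diskpart_script(disk))
    return target
```

Calling `write_diskpart_script("filename.txt")` creates the file; after pressing F8 it can then be started with `diskpart /s filename.txt` as described above. Be careful with the disk number, because `clean` wipes the selected disk.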

More blogposts on this topic:

PXE Boot files in RemoteInstall folder explained (UEFI)

Labels: 0x80004005, 0x8007000F, 80004005, 8007000F, BIOS, EFI, PXE, PXE Boot, RemoteInstall, UEFI, WDS, wdsmgfw.efi, wdsnbp.com

Wednesday, November 5, 2014

My personal experience with HP ThinShell for Kiosk Mode

Because my main focus is on endpoints, I do a lot with fat, thin and virtual clients and mobile devices. This time I want to mention HP thin clients, which have some nice software on board by default, for example Cloud Connection Manager, ThinShell and Unified Write Filter (UWF). ThinShell is a client automation tool that enables Kiosk Mode (shell replacement) functionality for standard users (non-administrators).

Features of HP ThinShell include the following:

-You can choose to customize and use the built-in ThinShell interface or specify an entirely different shell program.

-Using your administrator credentials, you can customize the ThinShell interface and settings from within a standard user account.

-ThinShell can be used in conjunction with Cloud Connection Manager to simplify Kiosk Mode deployments for multiple standard users.

ThinShell can be downloaded here (64-bit or 32-bit)

Installation is very easy, and on HP thin clients it's already there.

ThinShell settings screens:

-User interface, choose buttons

-Applications for shell replacement

-Control Panel items available

-Default website to show

-Default behavior when process stops

After a reboot you will see the changes immediately! It's like ThinKiosk, but integrated by default. Very nice to see some progress here.

With ThinShell you can set up thin clients very easily. Just use HP Device Manager (or ConfigMgr) for capturing, deploying and managing the thin clients afterwards.

Monday, November 3, 2014

Microsoft Surface Pro 3 first experience

Last week my new work device was delivered: a Microsoft Surface Pro 3. I decided to order one because of its great look and feel, very good reviews, and Windows 10 in the pipeline. In the past I had a Surface RT (the first one), but that was not really what I wanted. This device, however, is a real notebook killer; there is no need to have a notebook next to it. It's great to experience the whole Windows look and feel with touchscreen and pen functionality. With Windows 10 in the pipeline this will become even better! Really happy with my choice here ;)

Because the Surface Pro 3 is delivered with Windows 8.1 Pro, and Enterprise is needed for DirectAccess functionality, I upgraded my device. Just start an in-place upgrade; there is no need to format or remove anything. After the upgrade, type in the new Windows Enterprise key and you're done! Leave the recovery partition in place, so you can roll back when something goes wrong. I did that once, so keep it in case you need it sometime. After the upgrade, however, I did not see the Windows 8.1 Update 1 features. They will be set up at a later moment, when more software updates are installed.

If you want the power button on the Start screen, you can enable it through the registry. It seems the power button is not always displayed, given that the operating system can be used in desktop or tablet mode. Start Registry Editor and browse to "HKEY_CURRENT_USER > Software > Microsoft > Windows > CurrentVersion > ImmersiveShell". Expand the tree and create a new key called "Launcher". Create a new DWORD (32-bit) value there called "Launcher_ShowPowerButtonOnStartScreen". Give it a value of "1" to activate it and restart the device. The power button will then be visible.
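If you would rather not click through Registry Editor, the same key and value could be put in a .reg file. Below is a minimal Python sketch that generates such a file; the function names and the file name are my own illustrative assumptions, and only the value "1" comes from the steps above.

```python
# Key and value name as described in the steps above.
KEY_PATH = (
    r"HKEY_CURRENT_USER\Software\Microsoft\Windows"
    r"\CurrentVersion\ImmersiveShell\Launcher"
)
VALUE_NAME = "Launcher_ShowPowerButtonOnStartScreen"


def build_reg_file(value: int = 1) -> str:
    """Return .reg file text that sets the DWORD value (hypothetical helper).

    Only value 1 (show the power button) is taken from the post;
    any other value is an untested assumption.
    """
    if not 0 <= value <= 0xFFFFFFFF:
        raise ValueError("a DWORD must fit in 32 bits")
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KEY_PATH}]",
        # DWORD values are written as eight hexadecimal digits.
        f'"{VALUE_NAME}"=dword:{value:08x}',
        "",
    ]
    return "\r\n".join(lines)


def write_reg_file(path: str = "power-button.reg", value: int = 1) -> None:
    """Write the .reg file; UTF-16 is the encoding regedit uses on export."""
    with open(path, "w", encoding="utf-16", newline="") as handle:
        handle.write(build_reg_file(value))
```

Running `write_reg_file()` creates `power-button.reg`, which can be imported by double-clicking it or with `reg import power-button.reg`. Restart the device afterwards, as with the manual steps.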

A nice thing is that I worked the whole day with just the Type Cover and pen. No mouse? Actually, I didn't miss it today. With the pen you can do all daily operations without the need for a mouse. I'm curious whether I will switch back to a mouse or keep using the pen. Time will tell ;)

Can't wait for Windows 10 to complete my Surface experience! Expect more in a few weeks, when I have done more with my device!

-My personal experience with Windows 10 Technical Preview

-Windows 10 Technical Preview updated with 7,000 changes and fixes

If the pen isn't working correctly (a single click should open OneNote and a double click should take a screen capture), check these guides too:

-Quick Things to Try If Your Surface Pro 3 Pen Doesn’t Work

-Deploying Surface Pro 3 Pen and OneNote Tips

-Troubleshoot Surface Pen
